School of Education

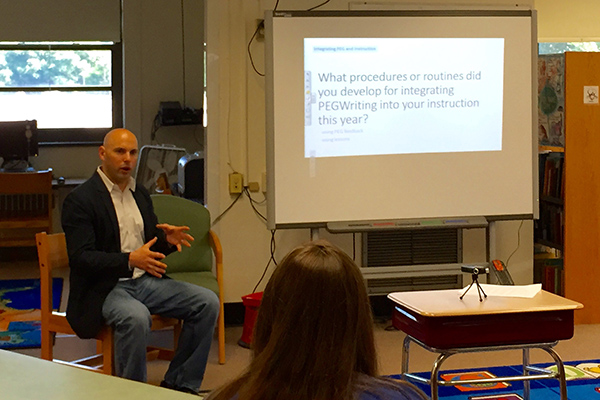

Joshua Wilson studies teachers’ use of essay-scoring software

“Can we write during recess?” Some students at Anna P. Mote Elementary School were asking that question while their teachers tested software that automatically evaluates essays, as part of a study by University of Delaware researcher Joshua Wilson.

Wilson, an assistant professor in UD’s School of Education in the College of Education and Human Development, asked teachers at Mote and at Heritage Elementary School, both in Delaware’s Red Clay Consolidated School District, to use the software during the 2014-15 school year and report their reactions.

Wilson, whose doctorate is in special education, is studying how the use of such software might shape instruction and help struggling writers.

The software Wilson used, PEGWriting (short for Project Essay Grade Writing), is based on work by the late education researcher Ellis B. Page and is sold by Measurement Incorporated, which supports Wilson’s research through indirect funding to the University.

The software uses algorithms to measure more than 500 text-level variables to yield scores and feedback regarding the following characteristics of writing quality: idea development, organization, style, word choice, sentence structure, and writing conventions such as spelling and grammar.

The goal is to give teachers useful diagnostic information on each writer and to free up their time to address problems and help students with what no machine can comprehend: content, reasoning and, especially, the young writer at work.

Writing is recognized as a critical skill in business, education and many other areas of social life. Finding reliable, efficient ways to assess writing is of growing national interest as standardized tests add writing components and move to computer-based formats.

The National Assessment of Educational Progress, also known as the Nation’s Report Card, first offered computer-based writing tests in 2011 for grades 8 and 12 and plans to add grade 4 tests in 2017. That test relies on trained human readers for all scoring.

Other standardized tests also include writing components, such as the assessments developed by the Partnership for Assessment of Readiness for College and Careers (PARCC) and the Smarter Balanced Assessment, which Delaware used for the first time this year. Both PARCC and Smarter Balanced are computer-based tests that will use automated essay scoring in the coming years.

Researchers have established that computer models closely predict the scores human raters would give a piece of writing, Wilson said, and efforts to improve that accuracy continue.

However, Wilson’s research is the first to examine how the software might be used in conjunction with instruction rather than as a standalone scoring and feedback tool.

For more information on Wilson’s research, see the full UDaily article.